I started this project on 10/10/24 following a Project Pitching event at my university (UCSB). In preparation for this project I earned a certificate in a course called Computer Vision for Data Scientists on LinkedIn Learning. Combined with prior experience from past projects (check out https://jaekop.me wink wink), I was able to develop a basic model that I have kept developing over the course of this month.

My original idea for this project was a very simple model that consisted of 4 files:

- collect.py

- Used a live camera (cv2) to take n photos and create m folders. It started off very simple, with 720p quality and about 100 images for all of the signs.

- create_dataset.py

- The original idea of this file was to process each image using mediapipe's hand landmark detection, then go through every image in each folder and create a pickle file to organize the model data. The idea was also to apply filters that isolate the hand from the rest of the image.

- train_class.py

- Originally I trained on the pickle data with a Random Forest classifier, a simpler, general-purpose classifier. The data is split using train_test_split with stratify, so the train and test sets keep the same class balance.

- inf_clas.py

- This ran the trained model and created the hand landmarks it needed. It held the dictionary that mapped the folder number the model predicted to the matching sign.
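To make the create_dataset.py and inf_clas.py steps above more concrete, here is a minimal sketch, not the actual project code. It assumes each detected hand comes back as 21 (x, y) landmark pairs (which is what mediapipe's hand model reports), flattens them into one feature row, pickles the rows and labels together, and maps a folder number back to a sign name. The function names, the min-subtraction normalization, and the three-entry `LABELS` dictionary are all made up for illustration; the real project has 12 signs and its folder order isn't stated here.

```python
import os
import pickle
import tempfile

# Hypothetical folder-number -> sign mapping (the real project has 12 signs).
LABELS = {0: "Ram", 1: "Tiger", 2: "Dragon"}


def landmarks_to_features(landmarks):
    """Flatten (x, y) hand landmarks into one feature row.

    Subtracting the smallest x and y makes the row independent of where
    the hand sits in the frame (an assumed normalization step).
    """
    xs = [x for x, _ in landmarks]
    ys = [y for _, y in landmarks]
    min_x, min_y = min(xs), min(ys)
    row = []
    for x, y in landmarks:
        row.extend([x - min_x, y - min_y])
    return row


def save_dataset(rows, labels, path):
    """Store feature rows and their folder labels in a single pickle file."""
    with open(path, "wb") as f:
        pickle.dump({"data": rows, "labels": labels}, f)


def load_dataset(path):
    """Read back the dictionary written by save_dataset."""
    with open(path, "rb") as f:
        return pickle.load(f)


if __name__ == "__main__":
    # Fake landmarks standing in for one detected hand (21 points).
    fake_hand = [(0.25 + 0.01 * i, 0.5 + 0.02 * i) for i in range(21)]
    row = landmarks_to_features(fake_hand)

    path = os.path.join(tempfile.mkdtemp(), "data.pickle")
    save_dataset([row], [1], path)

    loaded = load_dataset(path)
    # 21 landmarks x 2 coordinates, and the sign name for folder 1.
    print(len(loaded["data"][0]), LABELS[loaded["labels"][0]])
```

Keeping the number-to-name dictionary only at inference time means the classifier itself just deals with folder numbers, which matches how the files above are described.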

Beginning of Change Log

I ran into the issue of overfitting, which was bound to happen with images that didn't vary much since I recorded them live. It would read a flat hand as a finger gun. That had a lot to do with mediapipe's landmark detection, but I didn't diagnose that until much later. So I tweaked min_samples_split, max_depth, and a few other parameters of the RandomForest classifier, which helped a bit but not much. I then started thinking about changing the mediapipe setup and dropped min_detection_confidence to extremely low values, to no avail.

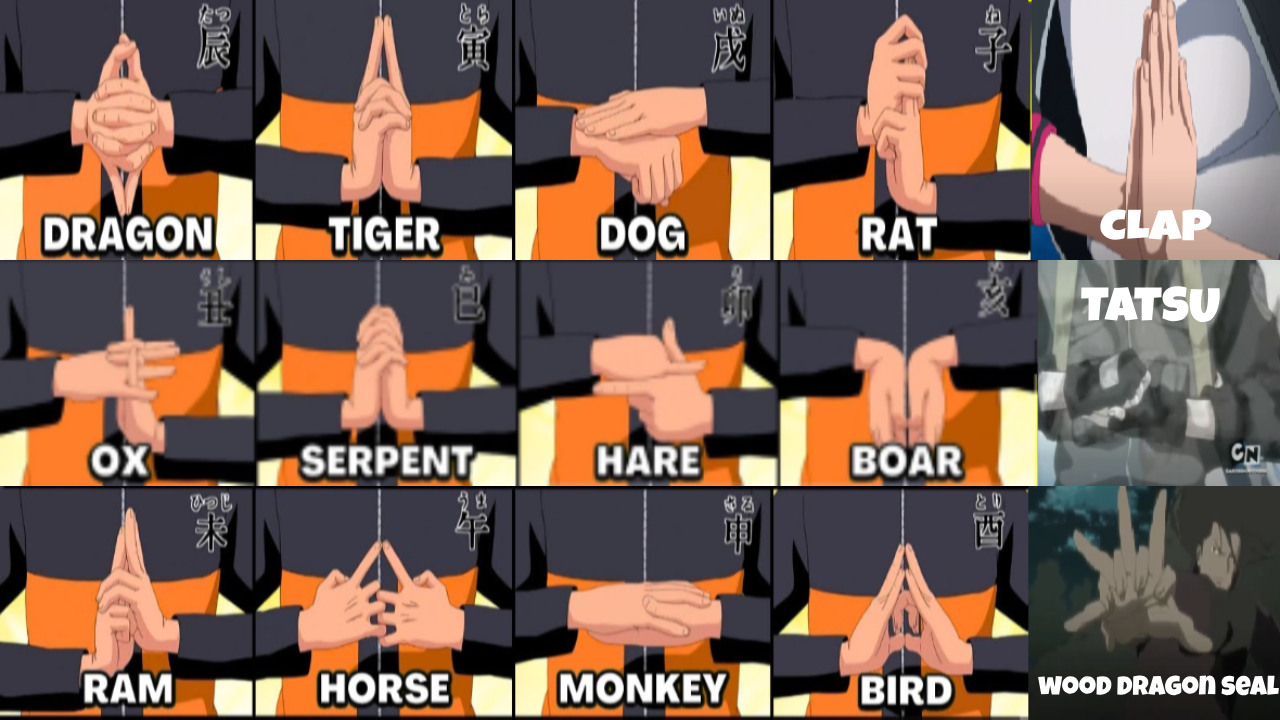
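For reference, the Random Forest setup described above (stratified split, then tuning `max_depth` and `min_samples_split`) can be sketched with scikit-learn roughly like this. The data here is synthetic and only stands in for the pickled landmark rows, and the parameter values are placeholders, not the ones actually used in the project.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the landmark rows: 3 classes, 100 rows each,
# 42 features per row (21 landmarks x 2 coordinates). Not real project data.
rng = np.random.default_rng(0)
labels = np.repeat(np.arange(3), 100)
data = rng.normal(size=(300, 42)) + labels[:, None]

# stratify=labels keeps the class proportions equal in both splits.
x_train, x_test, y_train, y_test = train_test_split(
    data, labels, test_size=0.2, shuffle=True, stratify=labels, random_state=0
)

# Placeholder values: a shallower tree and a larger min_samples_split
# both limit how closely each tree can memorize the training rows.
model = RandomForestClassifier(
    n_estimators=100,
    max_depth=10,
    min_samples_split=4,
    random_state=0,
)
model.fit(x_train, y_train)

train_acc = accuracy_score(y_train, model.predict(x_train))
test_acc = accuracy_score(y_test, model.predict(x_test))
print(f"train accuracy: {train_acc:.3f}, test accuracy: {test_acc:.3f}")
```

A large gap between the two accuracies is one sign of overfitting. Keep in mind that when every image comes from the same live recording session, the held-out split can look very similar to the training split, so a good test score here may not carry over to new lighting, backgrounds, or people.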

I decided to swap RandomForest for a classifier more suited to real-time detection: the YOLO series. I picked a slightly older, well-documented version, YOLOv5, for troubleshooting reasons. That meant I had to change how I stored data, from a pickle file to annotations with val and label folders. For the model I chose a smaller one, yolov5s.pt, since I'm only detecting 12 handsigns. I had many issues detecting just the hands, so I made some changes to YOLOv5's train.py and other files that control how the model is trained. I edited what it recognizes and tweaked collect.py to focus more on the hands and pick up fewer patterns from the background.

Then I finally realized that mediapipe's hand tracking was the problem: it was not detecting the overlapping hands that many of these handsigns have. I decided to rely on the general pattern recognition of YOLOv5 instead of mediapipe's landmarks, which ultimately could not handle the complexity of overlapping hands.

After messing around with the datasets, I realized that the quality of my data was very bad, so I needed to add more variance through color filters and start using Roboflow, a website that lets me create annotations for the YOLOv5 model to learn from. Once I adopted Roboflow, my process was streamlined so heavily that I didn't even need half of the Python files anymore. I also tweaked collect.py to randomly rotate images 15 degrees clockwise or counterclockwise and to randomize the hue. I also made it take a screenshot every 5 frames to try to counter overfitting.

As of 11/7/24 I got my program to run for the very first time and perform jutsus. I still face the issue of recognizing handsigns that are sort of similar, especially Ram and Tiger, Dragon and Rat, and lastly Ox, Hare, and Dog for some reason. I need to diversify the data, especially with different shirts and skin tones.
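The collect.py tweaks from the change log (keeping every 5th frame, rotating within 15 degrees, randomizing the hue) could look roughly like the sketch below. It only uses the standard library and works on single pixels, so it shows the logic rather than the real image code. The function names, the `HUE_JITTER` value, and treating the rotation as a random angle between -15 and +15 degrees are my assumptions; applying the rotation to a full frame would be left to the image library collect.py already uses (cv2).

```python
import colorsys
import random

ROTATION_LIMIT_DEG = 15  # from the text: 15 degrees either direction
CAPTURE_EVERY = 5        # from the text: keep every 5th frame
HUE_JITTER = 0.1         # assumption: max hue shift as a fraction of the color wheel


def should_capture(frame_index, every=CAPTURE_EVERY):
    """Keep only every `every`-th frame so neighboring shots differ more."""
    return frame_index % every == 0


def pick_augmentation(rng):
    """Draw a random rotation angle (degrees) and hue shift for one image."""
    angle = rng.uniform(-ROTATION_LIMIT_DEG, ROTATION_LIMIT_DEG)
    hue_shift = rng.uniform(-HUE_JITTER, HUE_JITTER)
    return angle, hue_shift


def shift_pixel_hue(rgb, hue_shift):
    """Shift the hue of one (r, g, b) pixel with 0-255 channels."""
    r, g, b = (c / 255.0 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    h = (h + hue_shift) % 1.0  # hue wraps around the color wheel
    return tuple(round(c * 255) for c in colorsys.hsv_to_rgb(h, s, v))


if __name__ == "__main__":
    rng = random.Random(0)

    # Which of the first 20 camera frames would be saved.
    print([i for i in range(20) if should_capture(i)])

    # One random augmentation applied to a single sample pixel.
    angle, hue_shift = pick_augmentation(rng)
    print(round(angle, 1), shift_pixel_hue((200, 120, 90), hue_shift))
```

Skipping frames helps because consecutive frames from a live camera are nearly identical, so saving all of them adds files without adding much variety.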

As of 12/8/2024 I got Flask to display my model on my local machine, woohoo!